When I wrote about how software 2FA is a step up from SMS 2FA, I also mentioned that there’s one attack vector software 2FA is still vulnerable to: phishing. It’s the user who must verify they are entering the passcode into the legitimate website, and bad actors exploit that.

Phishing is a hard problem to solve because the bad actor exploits human behavior that has been ingrained in all of us for the last couple hundred thousand years and will probably continue to be for a long time in the future.

Everybody is prone to falling for phishing campaigns, even security-conscious people, because these attacks are never head-on, explicit attacks; they are sneaky and arrive when you aren’t expecting them.

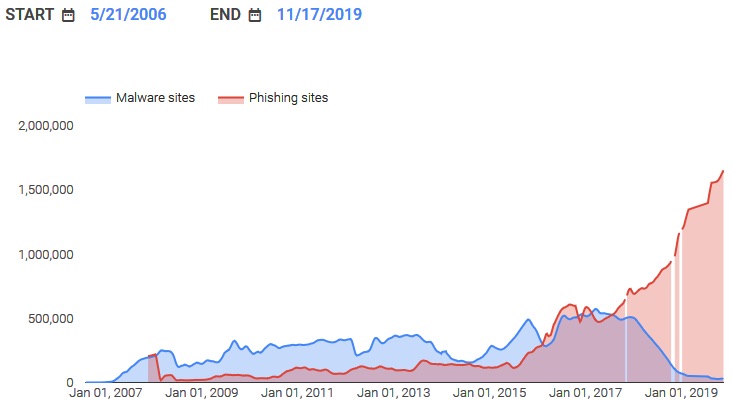

And if we look at types of attacks over the years, there is a clear trend: the number of websites containing malware has been declining, while the number of phishing websites skyrocketed in the last few years. You can see that in the chart below:

Source: Bleeping Computer via Google Transparency Report (archive)

Attackers are becoming more proactive and trying to force errors. With more devices connected to the Internet, these attacks become more profitable (we’ve seen a surge during the last few years), and now more companies are starting to pay attention to phishing attacks.

Taking the human element out of security is not an easy task, but that is what Universal 2nd Factor, or U2F, tries to do. It’s an open protocol for second-factor hardware keys that doesn’t rely on the user to make sure they’re on the right website; a mechanism built into the key itself verifies that.

How The U2F Protocol Was Created

In 2011, the founders of Stockholm-based Yubico relocated to Silicon Valley to be in close contact with tech companies and to further develop the protocol used by the YubiKey into a universal standard.

Note: many hardware tokens, such as the YubiKey 5, support other types of authentication on top of U2F (e.g., TOTP), but references to hardware tokens throughout this article refer specifically to U2F.

In that same year, they started discussions with Google’s internal security team and together identified YubiKey as the best solution to solve the employee phishing problem Google was having at the time.

One year later, in 2012, Google and Yubico signed a partnership to co-create U2F, an open standard for two-factor authentication based on public-key cryptography that would remove the need for the user to verify that they were, in fact, at the intended website.

They tested the USB token Yubico had created with Google employees, and one year later, they joined the FIDO Alliance board and brought the protocol’s technical specifications with them. The protocol continued to be improved over the years and was released publicly for Chrome and Gmail, along with many other products.

In 2019, the World Wide Web Consortium (W3C) announced the Web Authentication API (WebAuthn), the new global standard that brings support for FIDO U2F security keys to most modern browsers. By that time, Google had already eliminated phishing attacks by having its 85,000 employees use hardware tokens.

How Hardware 2FA (or U2F) Works

When you think of a real-world key, you probably imagine that the same key you use to lock something is also used to unlock it. If you have a chest with all of your passwords stored inside, this setup works because you’re the only one who needs to access it.

But what would happen if you wanted to share a password securely with me (and only me)?

Well, here’s an option. I could get a chest and put it outside my door. Then I could leave the key on a key holder outside, and you would come over, put the password inside the chest, lock it, and put the key back on the key holder. As soon as I get back home, I would take the keys, open the chest, and pick up the password you put in there.

This approach is far from ideal because we can’t be sure who else used the key to open the chest and read the password. I also can’t be sure that you, or anyone else, didn’t make copies of my key to open my chest.

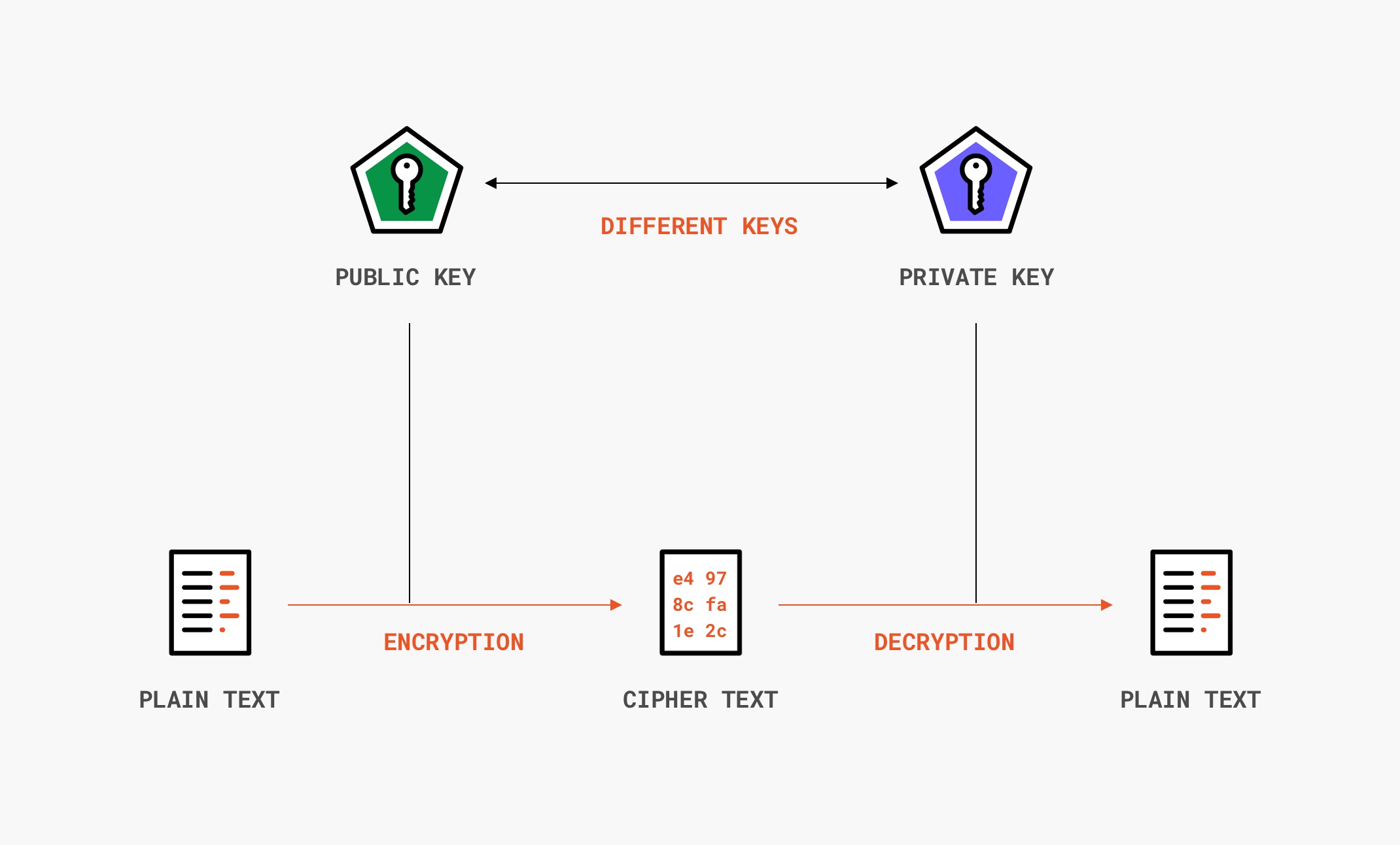

The solution to this problem is public-key cryptography, and it’s the principle used by U2F tokens to authenticate users. In public-key cryptography, instead of using just one key, we use a pair of keys — if you lock the chest with a certain key, only the other key can unlock it.

Now I can keep one key — my private key — and put the other one outside for you to use — the public key. And since only my private key can unlock the chest, once you lock it, no one else can use the key I left outside to peek inside the chest.

In cryptography, these keys are called a key pair and are mathematically linked. The public key can be derived from the private key and shared as widely as I want, but you can’t recreate my private key from the public key.
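To make the "lock with one key, unlock with the other" idea concrete, here is a minimal sketch using textbook RSA with tiny primes. This is an illustration only: the numbers are far too small to be secure, the function name `apply_key` is made up for this example, and U2F tokens use elliptic-curve keys rather than RSA.

```python
# Toy RSA key pair with tiny primes: for illustration only, never for real use.
p, q = 61, 53
n = p * q                    # modulus shared by both keys
phi = (p - 1) * (q - 1)
e = 17                       # public exponent
d = pow(e, -1, phi)          # private exponent: modular inverse of e (Python 3.8+)

public_key = (e, n)          # the key left outside for anyone to use
private_key = (d, n)         # the key that never leaves its owner


def apply_key(value, key):
    """Lock or unlock a number with one half of the key pair."""
    exponent, modulus = key
    return pow(value, exponent, modulus)


password = 65                                 # the "password" put in the chest
locked = apply_key(password, public_key)      # anyone can lock with the public key
unlocked = apply_key(locked, private_key)     # only the private key opens it again
wrong_key = apply_key(locked, public_key)     # reusing the public key does not open it

print(unlocked == password)    # True
print(wrong_key == password)   # False
```

The public key alone is enough to lock the chest, but applying it a second time does not recover the original value; only the private key does.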

Key pairs can be created using software, but in the case of U2F, the private key is generated using a unique secret that is embedded during the manufacturing process and can never leave your U2F token, which is what makes it so secure.

The process to start using U2F in an account is simple and happens in two steps: registration and authentication.

Registration

This step involves four parties:

- the user,

- the hardware token,

- the client (e.g., a browser),

- and the remote server.

To register a security token, the user first needs to authenticate through another method, e.g., with username and password, or be in the middle of creating the account for the first time. After the user signals they want to register a U2F token, the server sends a challenge (a random number) and an AppID to the user’s device.

The AppID is a unique identifier for the app:

- on a browser, the AppID is the URL, like auth0.com,

- on Android, it is android:apk-key-hash:<hash-of-apk-signing-cert>,

- and on iOS, it is ios:bundle-id:<ios-bundle-id-of-app>.

Once this information is received, the user will be prompted to press a button on the hardware token to confirm the request. This will prevent remote attacks even if the key is inserted into the user’s device.
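The server's side of this request can be sketched in a few lines. This is a rough illustration, not the actual U2F message format: the function name and the dictionary layout are assumptions made for this example.

```python
import secrets


def build_registration_request(app_id):
    """Hypothetical helper: the data a server sends to start a registration."""
    challenge = secrets.token_bytes(32)   # fresh, unpredictable value per request
    return {"challenge": challenge.hex(), "app_id": app_id}


request = build_registration_request("auth0.com")
print(request["app_id"])
print(len(request["challenge"]))   # 64 hex characters, i.e. 32 random bytes
```

A new challenge is generated for every request, so a response recorded earlier cannot simply be replayed later.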

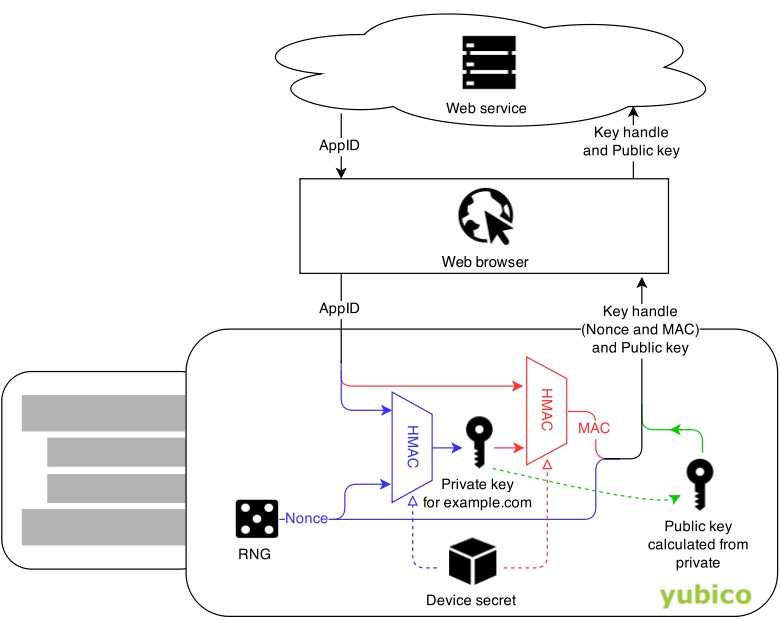

Source: Yubico

The hardware token will generate a nonce and hash it together with the AppID and the secret key on the token using HMAC-SHA256 to create a private key. From this private key, a public key is derived, along with a checksum. Check the diagram below:

The nonce and checksum (together often called the key handle) and the public key are sent back to the server to be stored for later, when the user comes back to authenticate.
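The key derivation described above can be sketched with Python's standard library. Treat this as an approximation under stated assumptions: the function names are invented for this example, the exact byte layout of the HMAC input is a guess, the checksum is assumed to be a second HMAC over the AppID and private key, and the elliptic-curve step that turns the private key into a public key is left out.

```python
import hashlib
import hmac
import secrets


def derive_private_key(device_secret, app_id, nonce):
    """HMAC-SHA256 over the AppID and nonce, keyed with the device secret."""
    return hmac.new(device_secret, app_id.encode() + nonce, hashlib.sha256).digest()


def make_checksum(device_secret, app_id, private_key):
    """Assumed checksum: a second HMAC binding the private key to the AppID."""
    return hmac.new(device_secret, app_id.encode() + private_key, hashlib.sha256).digest()


device_secret = secrets.token_bytes(32)   # stand-in for the secret embedded at manufacturing
nonce = secrets.token_bytes(32)           # fresh for every registration

private_key = derive_private_key(device_secret, "auth0.com", nonce)
checksum = make_checksum(device_secret, "auth0.com", private_key)

# A real token would now derive the public key from private_key and hand
# the nonce, checksum, and public key back to the server.
print(len(private_key), len(checksum))
```

Because the private key is recomputed from the device secret, the AppID, and the nonce, the token does not need to store a separate key for every website.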

Authentication

The user will authenticate as usual with their login and password. The server will remember that this user has a security token registered and will generate a new challenge to send back along with the AppID and the nonce created during the registration phase.

The browser will once again ask the user to press a button on their physical token, and the token will use the received information to re-create the same key pair that was created during the registration phase.

If the information was sent from a legitimate server, it should result in the same key pair, since the secret inside the device didn’t change. The device will then sign the challenge sent by the server with the private key and send the signature back to the server.

However, because the domain is hashed together with the device secret, if you’re on a phishing website, the token will generate a different key, and the checksum will fail.

The signature can only be verified with the public key that matches that private key, which the server should already have stored from the previous phase. The server uses that public key to check the signature against the challenge it sent, and if the signature is valid, the user is authenticated.
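The phishing check can be illustrated with a toy token. This is a sketch under assumptions, not a real U2F implementation: the `ToyToken` class and its method names are hypothetical, the checksum construction is assumed, and the signing step is omitted because Python's standard library has no elliptic-curve signatures.

```python
import hashlib
import hmac
import secrets


class ToyToken:
    """Hypothetical stand-in for a hardware token; signing is omitted."""

    def __init__(self):
        self._secret = secrets.token_bytes(32)   # never leaves the token

    def _private_key(self, app_id, nonce):
        return hmac.new(self._secret, app_id.encode() + nonce, hashlib.sha256).digest()

    def _checksum(self, app_id, private_key):
        return hmac.new(self._secret, app_id.encode() + private_key, hashlib.sha256).digest()

    def register(self, app_id):
        """Return the nonce and checksum the server stores for later."""
        nonce = secrets.token_bytes(32)
        return nonce, self._checksum(app_id, self._private_key(app_id, nonce))

    def can_authenticate(self, app_id, nonce, checksum):
        """Re-derive the key for this AppID and compare checksums."""
        key = self._private_key(app_id, nonce)
        return hmac.compare_digest(self._checksum(app_id, key), checksum)


token = ToyToken()
nonce, checksum = token.register("auth0.com")

print(token.can_authenticate("auth0.com", nonce, checksum))                # True
print(token.can_authenticate("auth0.com.evil.example", nonce, checksum))   # False
```

The second call uses a look-alike domain: the re-derived key differs, the checksum no longer matches, and the token refuses to continue without the user having to notice anything.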

What Makes Hardware 2FA Better than Software 2FA?

Hardware 2FA brings several security benefits over software 2FA. The most obvious one is phishing resistance. By using the website domain in the key generation process, the token assumes what normally would be a user responsibility — that of verifying that the website they are logging in to is legitimate.

It’s also more leak-resistant than software 2FA. We’ve seen that software 2FA relies on a secret shared between user and server ahead of time, from which the passcode is derived. But if the shared secret is leaked from the server, anyone can use it to generate the correct passcodes.
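To see why a leaked shared secret is so damaging, here is a compact TOTP sketch following RFC 6238 (HMAC-SHA1, 30-second steps, 6 digits). The secret below is the published RFC test value, used here as a stand-in for a secret leaked from a server.

```python
import hashlib
import hmac
import struct


def totp(shared_secret, unix_time, step=30, digits=6):
    """Derive a time-based one-time passcode from a shared secret."""
    counter = struct.pack(">Q", unix_time // step)           # 8-byte time counter
    digest = hmac.new(shared_secret, counter, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F                                # dynamic truncation
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


leaked_secret = b"12345678901234567890"    # RFC 6238 test secret

server_code = totp(leaked_secret, 59)      # what the server expects
attacker_code = totp(leaked_secret, 59)    # what anyone holding the secret computes

print(server_code)                    # 287082
print(server_code == attacker_code)   # True
```

Nothing in the computation is tied to the user's device: whoever holds the secret and a clock produces the same passcode.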

If the user has secured their account with U2F, however, and the public keys and key handle on the server get leaked, the attacker still won’t be able to take over the account because they’d still need the private key to sign the payload and authenticate.

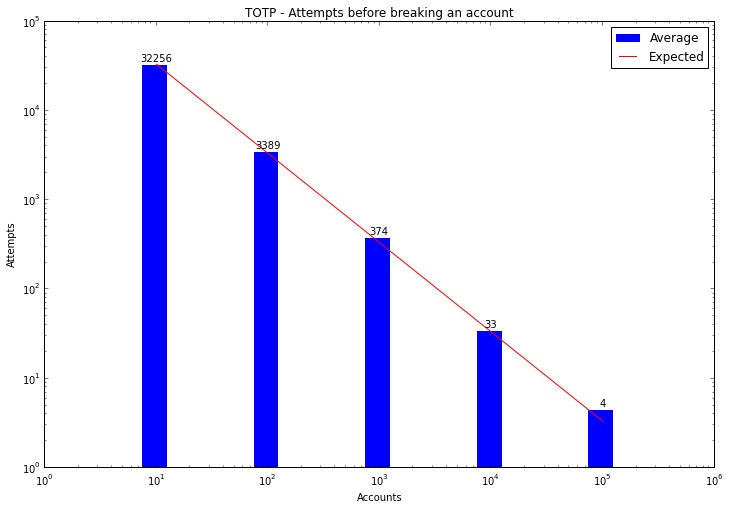

Not only that, a U2F token can’t be exploited remotely by automated bots. Most keys require a physical touch on the device to sign the payload, so they aren’t vulnerable to the brute-force attacks that can occur in improperly implemented TOTP systems (e.g., not taking into consideration user behavior, geolocation, number of bad attempts before locking down the account for a determined amount of time).

In a scenario where the attacker has acquired a list of compromised passwords, brute-forcing any individual account by going over all possible 1,000,000 passcode possibilities is increasingly trivial with a big enough list:

Source: The trouble with TOTP.
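The arithmetic behind that trend is short. The sketch below assumes each guess is an independent, uniformly random 6-digit code and that the attacker gets a fixed number of guesses per account before a lockout; the function name and the choice of three guesses are illustrative, not taken from the cited article.

```python
def chance_of_at_least_one_hit(accounts, guesses_per_account, code_space=1_000_000):
    """Probability that at least one account falls to random passcode guesses."""
    miss_one_account = 1 - guesses_per_account / code_space
    return 1 - miss_one_account ** accounts


for accounts in (1_000, 100_000, 1_000_000):
    p = chance_of_at_least_one_hit(accounts, guesses_per_account=3)
    print(f"{accounts:>9,} accounts -> {p:.1%}")
```

Under these assumptions, three guesses against 100,000 accounts already give roughly a one-in-four chance of getting into at least one of them, and a million accounts make it close to certain.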

An automated attack like that can’t happen on U2F-enabled accounts. Also, all cryptographic operations happen inside the token. The private key never leaves the token; only the public key, the key handle, and signed responses do. So even if the client is compromised without the user knowing, it’s still safe to use the token.

In terms of usability, an internal study at Google found that U2F allowed employees to authenticate as much as four times as fast as with an authenticator app.

And as with software 2FA, users can register multiple tokens as backups in case they lose one. The only problem here is that each additional key will cost money (presuming the user already had multiple devices on which to store the TOTP seeds), and considering the already low 2FA adoption rates, it’s something to be considered carefully.

In some cases, though, there might be a decrease in costs by mandating hardware tokens.

That’s what the study above found: initially, Google tested the YubiKey internally with 50,000 employees and found that not only were internal accounts protected with a YubiKey much more likely to be secure, but there was also a 92% reduction in support incidents.

In the end, this allowed Google to save money on support hours and on costs related to compromised accounts, even while issuing multiple backup keys per employee.

In fact, requiring backups may also be one of the downsides of hardware 2FA: having no backups can be extremely problematic if you lose your token. You’ll be at the mercy of the admins to let you in again, and in some cases, such as password managers where your vault is end-to-end encrypted, not even they can help you recover your account.

Driving Up The Adoption Of U2F

The biggest problem with U2F right now is adoption: it’s low even among users who use any 2FA, and 2FA adoption overall is already low (according to this 2018 report, fewer than 10% of Gmail users enabled any kind of 2FA). In this case, it’s a two-sided adoption problem:

- Users need to buy a hardware token to use U2F.

- Applications need to implement the protocol to allow users to use them in the first place.

And as you can see here, U2F is not nearly as well-supported as SMS and software 2FA. Surprisingly, even applications you would expect to support U2F still don’t, such as some of the password managers.

If you want to incentivize your users to use U2F, Auth0 now supports WebAuthn with FIDO (U2F) security keys, so you can easily offer it as an option for your users. For more information, visit Auth0 Docs.

About the author

Arthur Bellore

Cybersec Writer